<file:listener doc:name="On New File" config-ref="File_Config" outputMimeType='application/csv; separator=","'>
  <scheduling-strategy>
    <fixed-frequency frequency="45" timeUnit="SECONDS"/>
  </scheduling-strategy>
  <file:matcher filenamePattern="comma_separated.csv" />
</file:listener>

DataWeave Output Formats and Writer Properties

DataWeave 2.2 is compatible and bundled with Mule 4.2. This version of Mule reached its End of Life on May 2, 2023, when Extended Support ended. Deployments of new applications to CloudHub that use this version of Mule are no longer allowed. Only in-place updates to applications are permitted. MuleSoft recommends that you upgrade to the latest version of Mule 4 that is in Standard Support so that your applications run with the latest fixes and security enhancements.

DataWeave can read and write many types of data formats, such as JSON, XML, and many others. Before you begin, note that DataWeave version 2 is for Mule 4 apps. For a Mule 3 app, refer to the DataWeave 1.0 documentation set in the Mule 3.9 documentation. For other Mule versions, you can use the version selector for the Mule Runtime table of contents.

DataWeave supports these formats (or MIME types) as input and output:

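| MIME Type | Supported Formats |
|---|---|
| application/avro | Avro |
| application/csv | CSV |
| application/dw | DataWeave (weave) (for testing a DataWeave expression) |
| application/flatfile | Cobol Copybook, Fixed Width, Flat File |
| application/json | JSON |
| | Octet Stream (for binaries) |
| application/xlsx | Excel |
| | XML |
| | Newline Delimited JSON |
| multipart/form-data | Multipart (Form-Data) |
| text/plain | Text Plain (for plain text) |
| | Text Java Properties (Properties) |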

DataWeave Readers and Writers

DataWeave can read input data as a whole in memory, in indexed fashion, and, for some data formats, part by part by streaming the input. Reading a large file can exhaust memory or degrade performance. Streaming can improve performance, but it limits access to the file to sequential reads.

- Indexed and In-Memory: Allow for random access to data because both strategies parse the entire document. For these strategies, your DataWeave script can access any part of the resulting value at any time.
  - Indexed: Uses indexes over the disk.
  - In-Memory: Parses the entire document in memory.
- Streaming: Allows for sequential access to the file. This strategy partitions the input document into smaller items and accesses its data sequentially, storing the current item in memory. A DataWeave selector can access the portion of the file that is getting read. DataWeave supports streaming for a few formats, such as CSV and Excel.

Using Reader and Writer Properties

In some cases, it is necessary to modify or specify aspects of the format through format-specific properties. For example, you can specify CSV input and output properties, such as the separator (or delimiter) to use in the CSV file. For Cobol copybook, you need to specify the path to a schema file using the schemaPath property.

You can append reader properties to the MIME type (outputMimeType) attribute for certain components in your Mule app. Listeners and Read operations accept these settings. For example, an On New File listener can identify the comma (,) separator for a CSV input file by setting outputMimeType='application/csv; separator=","'.

Note that this outputMimeType setting helps the CSV reader interpret the format and delimiter of the input comma_separated.csv file, not the writer.

To specify the output format, you can provide the MIME type and any writer properties for the writer, such as the CSV or JSON writer used by a File Write operation. For example, you might need to write a pipe (|) delimiter in your CSV output payload, instead of some other delimiter used in the input. To do this, you append the property and its value to the output directive of a DataWeave expression. For example, this Write operation specifies the pipe as a separator:

<file:write doc:name="Write" config-ref="File_Config" path="my_transform">
  <file:content><![CDATA[#[output application/csv separator="|" --- payload]]]></file:content>
</file:write>

The sections below list the format-specific reader and writer properties available for each supported format.
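As a minimal sketch of a writer property in a standalone script, the following DataWeave expression writes CSV with a pipe separator. The inline records and their field names (name, city) are hypothetical sample data, not part of the Write operation shown earlier.

```dataweave
%dw 2.0
// The separator writer property is appended to the output directive.
output application/csv separator="|"
---
// Hypothetical sample records; in a Mule flow this would typically be payload.
[
  { name: "Mariano", city: "Buenos Aires" },
  { name: "Leandro", city: "San Francisco" }
]
```

With these assumed records, the writer should emit a header line (name|city) followed by one pipe-delimited line per record.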

Setting MIME Types

You can specify the MIME type for the input and output data that flows through a Mule app.

For DataWeave transformations, you can specify the MIME type for the output data.

For example, you might set the output header directive of an expression in the Transform Message component or a Write operation to output application/json or output application/csv.

This example sets the MIME type through a File Write operation to ensure that a format-specific writer, the CSV writer, outputs the payload in CSV format:

<file:write doc:name="Write" config-ref="File_Config" path="my_transform">
  <file:content><![CDATA[#[output application/csv --- payload]]]></file:content>
</file:write>

For input data, format-specific readers for Mule sources (such as the On New File listener), Mule operations (such as Read and HTTP Request operations), and DataWeave expressions attempt to infer the MIME type from metadata that is associated with input payloads, attributes, and variables in the Mule event. When the MIME type cannot be inferred from the metadata (and when that metadata is not static), Mule sources and operations allow you to specify the MIME type for the reader. For example, you might set the MIME type for the On New File listener to outputMimeType='application/csv' for CSV file input. This setting provides information about the file format to the CSV reader.

<file:listener doc:name="On New File"
  config-ref="File_Config"
  outputMimeType='application/csv'>
</file:listener>

Note that reader settings are not used to perform a transformation from one format to another. They simply help the reader interpret the format of the input.

You can also set special reader and writer properties for use by the format-specific reader or writer of a source, operation, or component. See Using Reader and Writer Properties.
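The distinction between reader and writer settings can be sketched with the DataWeave read function, which takes the text to parse, a MIME type, and an optional object of reader properties. In this sketch, the csvText variable and its contents are hypothetical. The reader settings only describe how to interpret the input, and the output directive alone decides the output format.

```dataweave
%dw 2.0
output application/json
// Hypothetical semicolon-separated CSV text, used only for illustration.
var csvText = "name;city\nMariano;Buenos Aires"
---
// Reader side: MIME type plus the separator reader property.
// Writer side: the output directive above selects JSON.
read(csvText, "application/csv", { separator: ";" })
```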

Avro

MIME Type: application/avro

Avro is a data serialization system.

Writer Properties (for application/avro)

When specifying application/avro as the output format in a DataWeave script, you can add the following properties to change the way the DataWeave parser processes data.

| Parameter | Type | Default | Description |
|---|---|---|---|
| | String | | Skips null values in the specified data structure. By default, it does not skip. |
| | Number | | Size of the buffer writer. |
| | Boolean | | When set to |

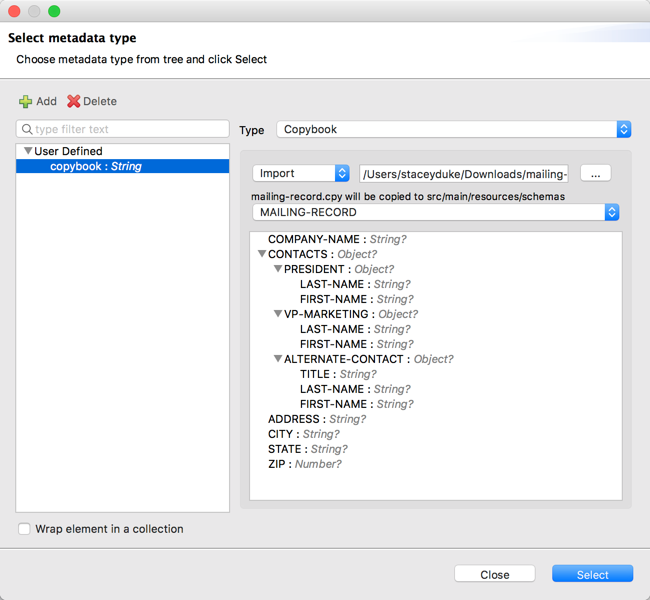

Cobol Copybook

MIME Type: application/flatfile

A Cobol copybook is a type of flat file that describes the layout of records and fields in a Cobol data file.

The Transform Message component provides settings for handling the Cobol copybook format. For example, you can import a Cobol definition into the Transform Message component and use it for your Copybook transformations.

Cobol copybook in DataWeave supports files of up to 15 MB, and the memory requirement is roughly 40 to 1. For example, a 1-MB file requires up to 40 MB of memory to process, so it’s important to consider this memory requirement in conjunction with your TPS needs for large copybook files. This is not an exact figure; the value might vary according to the complexity of the mapping instructions.
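The 40-to-1 guideline can be turned into a rough estimate. This sketch assumes the ratio applies linearly up to the 15 MB limit, which the note above presents only as an approximation. The function name estimatedMemoryMB is hypothetical.

```dataweave
%dw 2.0
output application/json
// Approximate memory-to-file-size ratio quoted above; not an exact figure.
var memoryRatio = 40
// Hypothetical helper: rough upper bound, in MB, for a file of the given size.
fun estimatedMemoryMB(fileSizeMB: Number): Number = fileSizeMB * memoryRatio
---
{
  oneMegabyteFile: estimatedMemoryMB(1),
  maximumSupportedFile: estimatedMemoryMB(15)
}
```

Under that assumption, the 1 MB case matches the 40 MB figure above, and a 15 MB file would need on the order of 600 MB.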

Importing a Copybook Definition

When you import a Copybook definition, the Transform Message component converts the definition to a flat file schema that you can reference with the schemaPath property.

To import a copybook definition:

- Right-click the input payload in the Transform component in Studio, and select Set Metadata to open the Set Metadata Type dialog.

  Note that you need to create a metadata type before you can import a copybook definition.
- Provide a name for your copybook metadata, such as copybook.
- Select the Copybook type from the Type drop-down menu.
- Import your copybook definition file.
- Click Select.

Figure 1. Importing a Copybook Definition File

For example, assume that you have a copybook definition file (mailing-record.cpy) that looks like this:

       01 MAILING-RECORD.
           05 COMPANY-NAME PIC X(30).
           05 CONTACTS.
               10 PRESIDENT.
                   15 LAST-NAME PIC X(15).
                   15 FIRST-NAME PIC X(8).
               10 VP-MARKETING.
                   15 LAST-NAME PIC X(15).
                   15 FIRST-NAME PIC X(8).
               10 ALTERNATE-CONTACT.
                   15 TITLE PIC X(10).
                   15 LAST-NAME PIC X(15).
                   15 FIRST-NAME PIC X(8).
           05 ADDRESS PIC X(15).
           05 CITY PIC X(15).
           05 STATE PIC XX.
           05 ZIP PIC 9(5).

- Copybook definitions must always begin with a 01 entry. A separate record type is generated for each 01 definition in your copybook (there must be at least one 01 definition for the copybook to be usable, so add one using an arbitrary name at the start of the copybook if none is present). If there are multiple 01 definitions in the copybook file, you can select which definition to use in the transform from the dropdown list.
- COBOL format requires definitions to use only columns 8-72 of each line. Data in columns 1-6 and past column 72 is ignored by the import process. Column 7 is the indicator area, which holds the comment ('*') and line continuation markers.

When you import the schema, the Transform component converts the copybook file to a flat file schema that it stores in the src/main/resources/schema folder of your Mule project. In flat file format, the copybook definition above looks like this:

form: COPYBOOK
id: 'MAILING-RECORD'
values:
- { name: 'COMPANY-NAME', type: String, length: 30 }
- name: 'CONTACTS'
  values:
  - name: 'PRESIDENT'
    values:
    - { name: 'LAST-NAME', type: String, length: 15 }
    - { name: 'FIRST-NAME', type: String, length: 8 }
  - name: 'VP-MARKETING'
    values:
    - { name: 'LAST-NAME', type: String, length: 15 }
    - { name: 'FIRST-NAME', type: String, length: 8 }
  - name: 'ALTERNATE-CONTACT'
    values:
    - { name: 'TITLE', type: String, length: 10 }
    - { name: 'LAST-NAME', type: String, length: 15 }
    - { name: 'FIRST-NAME', type: String, length: 8 }
- { name: 'ADDRESS', type: String, length: 15 }
- { name: 'CITY', type: String, length: 15 }
- { name: 'STATE', type: String, length: 2 }
- { name: 'ZIP', type: Integer, length: 5, format: { justify: ZEROES, sign: UNSIGNED } }

After importing the copybook, you can use the schemaPath property to reference the associated flat file through the output directive. For example:

output application/flatfile schemaPath="src/main/resources/schemas/mailing-record.ffd"

Supported Copybook Features

Not all copybook features are supported by the Cobol Copybook format in DataWeave. In general, the format supports most common usages and simple patterns, including:

- USAGE of DISPLAY, BINARY (COMP), COMP-5, and PACKED-DECIMAL (COMP-3). For character encoding restrictions, see Character Encodings.
- PICTURE clauses for numeric values consisting only of:
  - '9' - One or more numeric character positions
  - 'S' - One optional sign character position, leading or trailing
  - 'V' - One optional decimal point
  - 'P' - One or more decimal scaling positions
- PICTURE clauses for alphanumeric values consisting only of 'X' character positions
- Repetition counts for '9', 'P', and 'X' characters in PICTURE clauses (as in 9(5) for a 5-digit numeric value)
- OCCURS DEPENDING ON with controlVal property in schema. Note that if the control value is nested inside a containing structure, you need to manually modify the generated schema to specify the full path for the value in the form "container.value".
- REDEFINES clause (used to provide different views of the same portion of record data; see details in the REDEFINES Support section below)

Unsupported features include:

- Alphanumeric-edited PICTURE clauses
- Numeric-edited PICTURE clauses, including all forms of insertion, replacement, and zero suppression
- Special level-numbers:
  - Level 66 - Alternate name for field or group
  - Level 77 - Independent data item
  - Level 88 - Condition names (equivalent to an enumeration of values)
- SIGN clause at group level (only supported on elementary items with PICTURE clause)
- USAGE of COMP-1 or COMP-2, and USAGE clause at group level (only supported on elementary items with PICTURE clause)
- VALUE clause (used to define a value of a data item or conditional name from a literal or another data item)
- SYNC clause (used to align values within a record)

REDEFINES Support

REDEFINES facilitates dynamic interpretation of data in a record. When you import a copybook with REDEFINES present, the generated schema uses a special grouping with the name '*' (or '*1', '*2', and so on, if multiple REDEFINES groupings are present at the same level) to combine all the different interpretations. You use this special grouping name in your DataWeave expressions just as you use any other grouping name.

Use of REDEFINES groupings has higher overhead than normal copybook groupings, so MuleSoft recommends that you remove REDEFINES from your copybooks where possible before you import them into Studio.

Character Encodings

BINARY (COMP), COMP-5, or PACKED-DECIMAL (COMP-3) usages are only supported with single-byte character encodings, which use the entire range of 256 potential character codes. UTF-8 and other variable-length encodings are not supported for these usages (because they’re not single-byte), and ASCII is also not supported (because it doesn’t use the entire range). Supported character encodings include ISO-8859-1 (an extension of ASCII to full 8 bits) and other 8859 variations and EBCDIC (IBM037).

REDEFINES requires you to use a single-byte-per-character character encoding for the data, but any single-byte-per-character encoding can be used unless BINARY (COMP), COMP-5, or PACKED-DECIMAL (COMP-3) usages are included in the data.

Common Copybook Import Issues

The most common issue with copybook imports is a failure to follow the Cobol standard for input line regions. The copybook import parsing ignores the contents of columns 1-6 of each line, and ignores all lines with an '*' (asterisk) in column 7. It also ignores everything beyond column 72 in each line. This means that all your actual data definitions need to be within columns 8 through 72 of input lines.

Tabs in the input are not expanded because there is no defined standard for tab positions. Each tab character is treated as a single space character when counting copybook input columns.

Indentation is ignored when processing the copybook, with only level-numbers treated as significant. This is not normally a problem, but it means that copybooks might be accepted for import even though they are not accepted by Cobol compilers.

Both warnings and errors might be reported as a result of a copybook import. Warnings generally tell of unsupported or unrecognized features, which might or might not be significant. Errors are notifications of a problem that means the generated schema (if any) will not be a completely accurate representation of the copybook. You should review any warnings or errors reported and decide on the appropriate handling, which might be simply accepting the schema as generated, modifying the input copybook, or modifying the generated schema.

Reader Properties (for Cobol Copybook)

When defining application/flatfile input for the DataWeave reader, you can set the properties described in Reader Properties (for Flat File).

Note that schemas with type Binary or Packed don’t allow for the detection of line breaks, so setting recordParsing to lenient only allows for long records to be handled, not short ones. These schemas only work with certain single-byte character encodings (so not with UTF-8 or any multibyte format).

Writer Properties (for Cobol Copybook)

When specifying application/flatfile as the output format in a DataWeave script, you can add the properties described in Writer Properties (for Flat File) to change the way the DataWeave parser processes the data.

output application/flatfile schemaPath="src/main/resources/schemas/QBReqRsp.esl", structureIdent="QBResponse"

CSV

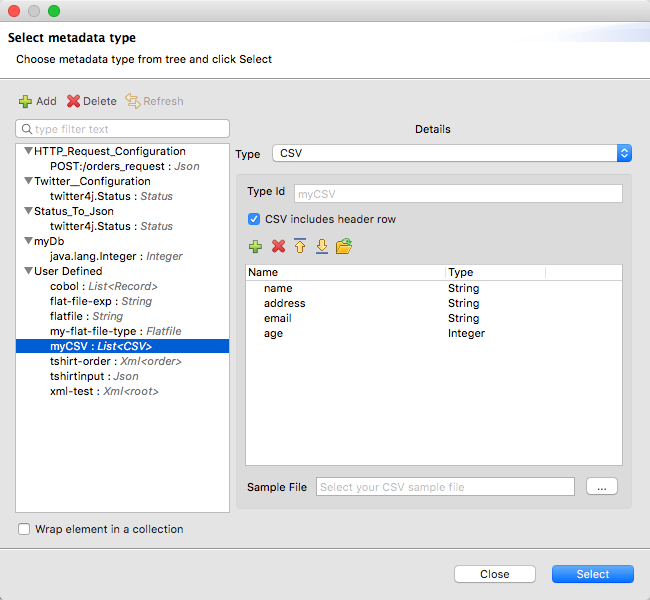

MIME Type: application/csv

CSV content is modeled in DataWeave as a list of objects, where every record is an object and every field in it is a property, for example:

%dw 2.0
output application/csv
---
[
  {
    "Name": "Mariano",
    "Last Name": "De achaval"
  },
  {
    "Name": "Leandro",
    "Last Name": "Shokida"
  }
]

This script produces the following CSV output:

Name,Last Name
Mariano,De achaval
Leandro,Shokida

Reader Properties (for CSV)

In CSV, you can assign any special character as the indicator for separating fields, toggling quotes, or escaping quotes. Make sure you know what special characters are in your input so that DataWeave can interpret them correctly.

When defining application/csv input for the DataWeave reader, you can set the following properties.

| Parameter | Type | Default | Description |
|---|---|---|---|
| | Number | | The line number where the body starts. |
| | Char | | Character used to escape invalid characters, such as separators or quotes within field values. |
| | Boolean | | Ignores any empty line. Valid options: true or false. |
| header | Boolean | true | Indicates whether the first line of the input contains header field names. Valid options: true or false. |
| | Number | | The line number where the header is located. |
| | Char | | Character to use for quotes. |
| separator | Char | | Character that separates one field from another field. |
| streaming | Boolean | | Used for streaming input CSV. Valid options: true or false. |

- When header=true, you can access the fields within the input anywhere by name, for example: payload.userName.
- When header=false, you must access the fields by index, referencing first the entry and then the field, for example: payload[107][2].
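The two access styles can be sketched with the read function and inline CSV text. The variable names and sample values below are hypothetical.

```dataweave
%dw 2.0
output application/json
// Hypothetical inline CSV: the first text has a header line, the second does not.
var withHeader = read("userName,city\nana,Lima", "application/csv", { header: true })
var withoutHeader = read("ana,Lima\nluis,Cusco", "application/csv", { header: false })
---
{
  // header=true: select the field by its header name
  byName: withHeader[0].userName,
  // header=false: select the entry by index, then the field by index
  byIndex: withoutHeader[1][0]
}
```

Under these assumptions, byName should resolve to ana and byIndex to luis.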

By default, the CSV reader stores input data from an entire file in-memory if the file is 1.5 MB or less. If the file is larger than 1.5 MB, the process writes the data to disk. For very large files, you can improve the performance of the reader by setting a streaming property to true. To demonstrate the use of this property, the next example streams a CSV file and transforms it to JSON.

<flow name="dw-streamingFlow">
  <scheduler doc:name="Scheduler">
    <scheduling-strategy>
      <fixed-frequency frequency="1" timeUnit="MINUTES"/>
    </scheduling-strategy>
  </scheduler>
  <file:read
    path="${app.home}/input.csv"
    config-ref="File_Config"
    outputMimeType="application/csv; streaming=true; header=true"/>
  <ee:transform doc:name="Transform Message">
    <ee:message>
      <ee:set-payload><![CDATA[%dw 2.0
output application/json
---
payload map ((row) -> {
  zipcode: row.zip
})]]></ee:set-payload>
    </ee:message>
  </ee:transform>
  <file:write doc:name="Write"
    config-ref="File_Config1"
    path="/path/to/output/file/output.json"/>
  <logger level="INFO" doc:name="Logger" message="#[payload]"/>
</flow>

- The example configures the Read operation to stream the CSV input by setting outputMimeType="application/csv; streaming=true". In the Studio UI, you can set the MIME Type on the operation to application/csv and the Parameters for the MIME Type to Key streaming with Value true. The example also sets header=true for illustration purposes, though this setting is the default.
- The DataWeave script uses the map function in the Transform Message component to iterate over each row in the CSV payload and select the value of each field in the zip column.
- The Write operation returns a file, output.json, which contains the result of the transformation.
- The Logger prints the same output payload that you see in output.json.

Note that the example indicates that the input file is located in the Studio project directory src/main/resources, which is the location of ${app.home}.

The structure of the CSV input looks something like the following.

street,city,zip,state,beds,baths,sale_date
3526 HIGH ST,SACRAMENTO,95838,CA,2,1,Wed May 21 00:00:00 EDT 2018
51 OMAHA CT,SACRAMENTO,95823,CA,3,1,Wed May 21 00:00:00 EDT 2018
2796 BRANCH ST,SACRAMENTO,95815,CA,2,1,Wed May 21 00:00:00 EDT 2018
2805 JANETTE WAY,SACRAMENTO,95815,CA,2,1,Wed May 21 00:00:00 EDT 2018
6001 MCMAHON DR,SACRAMENTO,95824,CA,2,1,Wed May 21 00:00:00 EDT 2018
5828 PEPPERMILL CT,SACRAMENTO,95841,CA,3,1,Wed May 21 00:00:00 EDT 2018

Note that a streamed file is typically much longer.

The transformation produces this JSON output:

[
  {
    "zipcode": "95838"
  },
  {
    "zipcode": "95823"
  },
  {
    "zipcode": "95815"
  },
  {
    "zipcode": "95815"
  },
  {
    "zipcode": "95824"
  },
  {
    "zipcode": "95841"
  }
]

Writer Properties (for CSV)

When specifying application/csv as the output format in a DataWeave script, you can add the following properties to change the way the DataWeave parser processes data.

| Parameter | Type | Default | Description |
|---|---|---|---|
| | Number | | The line number where the body starts. |
| | Number | | Size of the buffer writer. |
| | Boolean | | When set to |
| encoding | String | None | Encoding to be used by this writer, such as UTF-8. |
| | Char | | Character used to escape an invalid character, such as occurrences of the separator or quotes within field values. |
| | String | | Line separator to use when writing the CSV, for example: "\r\n" |
| header | Boolean | | Indicates whether the first line of the output contains header field names. Valid options: true or false. |
| | Number | | The line number where the header is located. |
| | Boolean | | Ignores any empty line. Valid options: true or false. |
| | Char | | The character to be used for quotes. |
| | Boolean | | Indicates whether to quote header values. Valid options: true or false. |
| quoteValues | Boolean | | Indicates whether every value should be quoted (even if it contains no special characters). |
| separator | String | | Character that separates one field from another field. |

All of these parameters are optional.

A CSV output directive example might look like this:

output application/csv separator=";", header=false, quoteValues=true

DataWeave (weave)

MIME Type: application/dw

This format is for debugging purposes only. Performance impacts can occur if you use this format in a production environment.

The DataWeave (weave) format is the canonical format for all transformations. This format can help you understand how input data is interpreted before it is transformed to a new format.

This format is intended only to help you debug the results of DataWeave transformations. It is significantly slower than other formats, so it is not recommended for production applications.

This example shows how XML input is expressed in the DataWeave format.

<employees>
  <employee>
    <firstname>Mariano</firstname>
    <lastname>DeAchaval</lastname>
  </employee>
  <employee>
    <firstname>Leandro</firstname>
    <lastname>Shokida</lastname>
  </employee>
</employees>

{
  employees: {
    employee: {
      firstname: "Mariano",
      lastname: "DeAchaval"
    },
    employee: {
      firstname: "Leandro",
      lastname: "Shokida"
    }
  }
} as Object {encoding: "UTF-8", mimeType: "text/xml"}

Writer Properties (for weave)

When specifying application/dw as the output format in a DataWeave script, you can add the following properties to change the way the parser processes data.

| Parameter | Type | Default | Description |
|---|---|---|---|
| | Number | | Size of the buffer writer. |
| | Boolean | | When set to |
| | Boolean | | Indicates whether the writer will ignore the schema. Valid options: true or false. |
| | String | | The string that is going to be used as indent. |
| | Number | | The maximum number of elements allowed in an Array or an Object. |
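As a sketch of the debugging use described for this format, writing a value as application/dw shows how DataWeave interpreted the input. The csvText variable and its contents are hypothetical.

```dataweave
%dw 2.0
output application/dw
// Hypothetical CSV text; the zip value looks numeric but is read from text.
var csvText = "city,zip\nSACRAMENTO,95838"
---
// The weave output makes the parsed structure and value types visible,
// for example whether zip was read as a String or a Number.
read(csvText, "application/csv")
```

Because of the performance cost noted for this format, a script like this is meant for inspection during development rather than for production flows.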

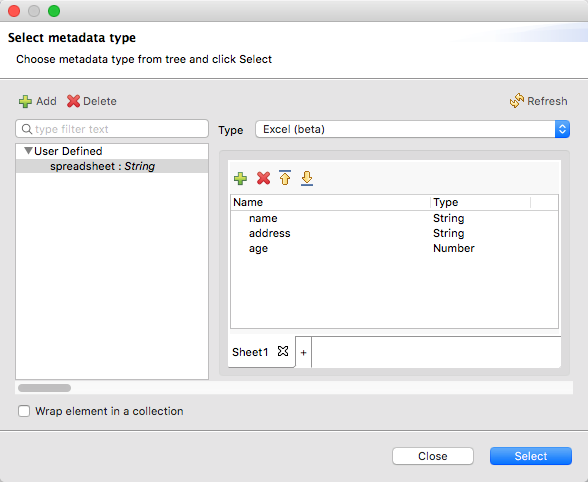

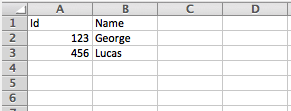

Excel

MIME Type: application/xlsx

Only .xlsx files are supported (Excel 2007). .xls files are not supported by Mule.

An Excel workbook is a sequence of sheets. In DataWeave, this is mapped to an object where each sheet is a key. Only one table is allowed per Excel sheet. A table is expressed as an array of rows. A row is an object whose keys are the columns and whose values are the cell contents.

output application/xlsx header=true
---
{
  Sheet1: [
    {
      Id: 123,
      Name: "George"
    },
    {
      Id: 456,
      Name: "Lucas"
    }
  ]
}

For another example, see Look Up Data in an Excel (XLSX) File.

Reader Properties (for Excel)

When defining application/xlsx input for the DataWeave reader, you can set the following properties.

| Parameter | Type | Default | Description |
|---|---|---|---|
| header | Boolean | | Indicates whether the Excel table contains headers. Valid options: true or false. |
| | Boolean | | Indicates whether to ignore empty lines. Valid options: true or false. |
| streaming | Boolean | | Introduced in Mule 4.2.2: Streaming is intended for processing a large file. When streaming is enabled, the reader accesses each row sequentially, keeping one row in memory at a time, instead of making all data available at once. Streaming does not permit random access to rows in the file. |
| | String | None | The position of the first cell in the table |
| | Boolean | | If set to |

By default, the Excel reader stores input data from an entire file in-memory if the file is 1.5 MB or less. If the file is larger than 1.5 MB, the process writes the data to disk. For very large files, you can improve the performance of the reader by setting a streaming property to true. To demonstrate the use of this property, the next example streams an XLSX file and transforms it to JSON.

<http:listener-config
  name="HTTP_Listener_config"
  doc:name="HTTP Listener config">
  <http:listener-connection host="0.0.0.0" port="8081" />
</http:listener-config>
<flow name="streaming_flow">
  <http:listener
    doc:name="Listener"
    config-ref="HTTP_Listener_config"
    path="/"
    outputMimeType="application/xlsx; streaming=true"/>
  <ee:transform doc:name="Transform Message">
    <ee:message>
      <ee:set-payload><![CDATA[%dw 2.0
output application/json
---
payload."Sheet Name" map ((row) -> {
  foo: row.a,
  bar: row.b
})]]></ee:set-payload>
    </ee:message>
  </ee:transform>
</flow>

The example:

- Configures the HTTP listener to stream the XLSX input by setting outputMimeType="application/xlsx; streaming=true". In the Studio UI, you can set the MIME Type on the listener to application/xlsx and the Parameters for the MIME Type to Key streaming and Value true.
- Uses a DataWeave script in the Transform Message component to iterate over each row in the XLSX payload (an XLSX sheet called "Sheet Name") and select the values of each cell in the row (using row.a, row.b). It assumes columns named a and b and maps the values from each row in those columns into foo and bar, respectively.

Writer Properties (for Excel)

When specifying application/xlsx as the output format in a DataWeave script,

you can add the following properties to change the way the DataWeave parser

processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

|

Boolean |

|

Indicates whether the Excel table contains

headers. Valid options: |

|

Boolean |

|

a Indicates whether to ignore empty

line. Valid options: |

|

String |

None |

The position of the first cell in the table

( |

|

Boolean |

|

If set to |

All of these parameters are optional. A DataWeave output directive for Excel might look like this:

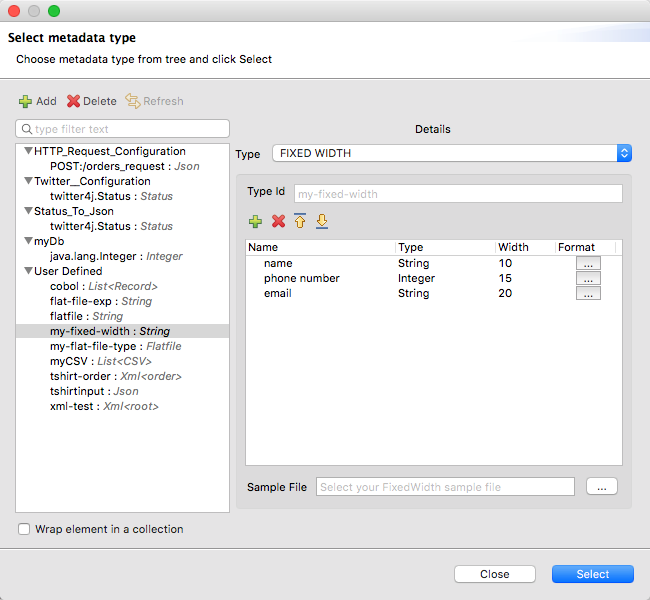

output application/xlsx header=trueFixed Width

MIME Type: application/flatfile

Fixed width types are technically considered a type of Flat File format, but when selecting this option, the Transform component offers you settings that are better tailored to the needs of this format.

Fixed width in DataWeave supports files of up to 15 MB, and the memory requirement is roughly 40 to 1. For example, a 1-MB file requires up to 40 MB of memory to process, so it’s important to consider this memory requirement in conjunction with your TPS needs for large fixed width files. This is not an exact figure; the value might vary according to the complexity of the mapping instructions.

Reader Properties (for Fixed Width)

When defining application/flatfile input for the DataWeave reader, you can set the properties described in Reader Properties (for Flat File).

Note that schemas with type Binary or Packed don’t allow for the detection of line breaks, so setting recordParsing to lenient only allows for long records to be handled, not short ones. These schemas only work with certain single-byte character encodings (so not with UTF-8 or any multibyte format).

Writer Properties (for Fixed Width)

When specifying application/flatfile as the output format in a DataWeave script, you can add the properties described in Writer Properties (for Flat File) to change the way the DataWeave parser processes the data.

All of the properties are optional.

A DataWeave output directive might look like this:

output application/flatfile schemaPath="src/main/resources/schemas/payment.ffd", encoding="UTF-8"

Flat File

MIME Type: application/flatfile

Flat file supports multiple types of fixed width records within a single message. The schema structure allows you to define how different record types are distinguished, and how the records are logically grouped.

Flat file in DataWeave supports files of up to 15 MB, and the memory requirement is roughly 40 to 1. For example, a 1-MB file requires up to 40 MB of memory to process, so it’s important to consider this memory requirement in conjunction with your TPS needs for large flat files. This is not an exact figure; the value might vary according to the complexity of the mapping instructions.

Reader Properties (for Flat File)

When defining application/flatfile input for the DataWeave reader, you can set the following properties.

| Parameter | Type | Default | Description |
|---|---|---|---|
| | Boolean | | Error if required value missing. Valid options: true or false. |
| | String | | Fill character used to represent missing values. To represent missing values in the input data, you can use: |
| recordParsing | String | | Expected separation between lines/records: |
| schemaPath | String | None | Schema definition. Location in your local disk of the schema file used to parse your input. |
| | String | None | Segment identifier in the schema for fixed width or copybook schemas (only needed when parsing a single segment/record definition and if the schema includes multiple segment definitions). |
| structureIdent | String | None | Structure identifier in schema for flatfile schemas (only needed when parsing a structure definition, and if the schema includes multiple structure definitions). |
| | Boolean | | Truncate COBOL copybook DEPENDING ON values to length used. Valid options: true or false. |
| | Boolean | | Use the |

Note that schemas with type Binary or Packed don’t allow for line break detection, so setting recordParsing to lenient only allows long records to be handled, not short ones. These schemas also currently only work with certain single-byte character encodings (so not with UTF-8 or any multibyte format).

Writer Properties (for Flat File)

When specifying application/flatfile as the output format in a DataWeave

script, you can add the following properties to change the way the DataWeave

parser processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

|

String |

None |

Encoding to be used by this writer,

such as |

|

Boolean |

|

Error if a required value is missing.

Valid options: |

|

String |

|

Fill character used to represent missing values:

|

|

String |

System property |

Record separator line break. Valid options:

Note that in Mule versions 4.0.4 and older, this is only used as a separator

when there are multiple records. Values translate directly to character codes

( |

|

String |

None |

Schema definition. Path where the schema file to be used is located. |

|

String |

None |

Segment identifier in the schema for fixed width or copybook schemas (only needed when writing a single segment/record definition, and if the schema includes multiple segment definitions). |

|

String |

None |

Structure identifier in schema for flatfile schemas (only needed when writing a structure definition and if the schema includes multiple structure definitions) |

|

Boolean |

|

Trim string values longer than the field length by truncating trailing characters. Valid options: |

|

Boolean |

|

Truncate DEPENDING ON COBOL

copybook values to length used. Valid options: |

|

Boolean |

|

Use the |

%dw 2.0

output application/flatfile schemaPath="src/main/resources/test-data/QBReqRsp.esl", structureIdent="QBResponse"

---

payload

Multipart (Form-Data)

MIME Type: multipart/form-data

DataWeave supports multipart subtypes, in particular form-data. These formats

enable you to handle several different data parts in a single payload,

regardless of the format each part has. To distinguish the beginning and end of

a part, a boundary is used and metadata for each part can be added through

headers.

Below you can see a raw multipart/form-data payload with a 34b21 boundary

consisting of 3 parts:

- a text/plain one named text

- an application/json file (a.json) named file1

- a text/html file (a.html) named file2

--34b21

Content-Disposition: form-data; name="text"

Content-Type: text/plain

Book

--34b21

Content-Disposition: form-data; name="file1"; filename="a.json"

Content-Type: application/json

{

"title": "Java 8 in Action",

"author": "Mario Fusco",

"year": 2014

}

--34b21

Content-Disposition: form-data; name="file2"; filename="a.html"

Content-Type: text/html

<!DOCTYPE html>

<title>

Available for download!

</title>

--34b21--

Within a DataWeave script, you can access and transform data from any of the

parts by selecting the parts element. Navigation can be array based or key

based when parts feature a name to reference them by. The part’s data can be

accessed through the content keyword while headers can be accessed through

the headers keyword.

The following script, for example, would produce Book:a.json considering

the previous payload:

%dw 2.0

output text/plain

---

payload.parts.text.content ++ ':' ++ payload.parts[1].headers.'Content-Disposition'.filename

To generate multipart content, build a DataWeave object with a list of parts, each containing its headers and content. The following DataWeave script produces the raw multipart data shown earlier, provided that the HTML data is available in the payload.

%dw 2.0

output multipart/form-data

boundary='34b21'

---

{

parts : {

text : {

headers : {

"Content-Type": "text/plain"

},

content : "Book"

},

file1 : {

headers : {

"Content-Disposition" : {

"name": "file1",

"filename": "a.json"

},

"Content-Type" : "application/json"

},

content : {

title: "Java 8 in Action",

author: "Mario Fusco",

year: 2014

}

},

file2 : {

headers : {

"Content-Disposition" : {

"filename": "a.html"

},

"Content-Type" : payload.^mimeType

},

content : payload

}

}

}

Notice that the key determines the part’s name if it is not explicitly

provided in the Content-Disposition header, and note that DataWeave can

handle content from supported formats, as well as references to unsupported

ones, such as HTML.

Reader Properties (for Multipart)

When defining multipart/form-data input for the DataWeave reader, you can set

the following property.

You can set the boundary for the reader to use when it analyzes the data.

| Parameter | Type | Default | Description |
|---|---|---|---|
| boundary | String | None | The multipart boundary value. A String to delimit parts. |

Note that in the DataWeave read function, you can also pass the property as

an optional parameter. The scope of the property is limited to the DataWeave

script where you call the function.
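As a minimal sketch of passing the property to the read function, the script below parses a raw multipart body and selects the content of the part named text. The variable vars.rawMultipart is hypothetical; it stands for wherever the raw body delimited by the 34b21 boundary is held in your flow.

```dataweave
%dw 2.0
output text/plain
---
// vars.rawMultipart is a hypothetical variable that holds the raw
// multipart body delimited by the 34b21 boundary.
// The third argument carries the reader properties for this call only.
read(vars.rawMultipart, "multipart/form-data", {boundary: "34b21"}).parts.text.content
```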

Writer Properties (for Multipart)

When specifying multipart/form-data as the output format in a DataWeave

script, you can add the following property to change the way the DataWeave

parser processes data.

output multipart/form-data

In the output directive, you can also set a property for the writer to use when it outputs the data in the specified format.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

String |

None |

The multipart boundary value. A String to delimit parts. |

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

For example, if a boundary is 34b21, then you can pass this:

output multipart/form-data boundary='34b21'

Note that in the DataWeave write function, you can also pass the property as

an optional parameter. The scope of the property is limited to the DataWeave

script where you call the function.
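A minimal sketch of the write function form, assuming a single text part: the third argument is an object of writer properties, so the boundary applies only to this call. The part content here is illustrative.

```dataweave
%dw 2.0
output application/java
---
// Serializes one text/plain part; the boundary is passed as a writer
// property instead of being set in the output directive.
write(
  {
    parts: {
      text: {
        headers: { "Content-Type": "text/plain" },
        content: "Book"
      }
    }
  },
  "multipart/form-data",
  { boundary: "34b21" }
)
```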

|

Multipart is typically, but not exclusively, used in HTTP where the boundary is

shared through the |

Java

MIME Type: application/java

This table shows the mapping from Java types to DataWeave types.

| Java Type | DataWeave Type |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Writer Properties (for Java)

When specifying application/java as the output format in a DataWeave

script, you can add the following property to change the way the DataWeave

parser processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Boolean |

False |

If duplicate keys are detected in

an object, the writer will change the value to an array with all those values.

Valid options: |

|

Boolean |

|

If a key has attributes, it will put

them as children key-value pairs of the key that contains them. The attribute

key name will start with @. Valid options: |

Custom Types (for Java)

There are a couple of custom Java types:

- class

- Enum

Metadata Property class (for Java)

Java developers use the class metadata key as a hint for what class needs to

be created and sent as an input. If this is not explicitly defined, DataWeave

tries to infer from the context or it assigns it the default values:

- java.util.HashMap for objects

- java.util.ArrayList for lists

%dw 2.0

type user = Object { class: "com.anypoint.df.pojo.User"}

output application/json

---

{

name : "Mariano",

age : 31

} as user

The code above defines the type of the required input as an instance of

com.anypoint.df.pojo.User.

Enum Custom Type (for Java)

In order to put an enum value in a java.util.Map, the DataWeave Java module

defines a custom type called Enum. It allows you to specify that a given

string should be handled as the name of a specified enum type. It should always

be used with the class property with the Java class name of the enum.
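As a sketch of how this might look, the script below marks a string as the name of an enum constant. The class com.acme.GenderEnum is a hypothetical enum used only for illustration; a real enum class would need to be available to the application.

```dataweave
%dw 2.0
output application/java
---
{
  // "Female" is treated as the name of a constant of the enum named in
  // the class property. com.acme.GenderEnum is hypothetical.
  gender: "Female" as Enum {class: "com.acme.GenderEnum"}
}
```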

JSON

MIME Type: application/json

Writer Properties (for JSON)

When specifying application/json as the output format in a DataWeave script,

you can add the following properties to change the way the DataWeave parser

processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

|

Boolean |

|

If duplicate keys are detected

in an object, the writer changes the value to an array with all those values.

Valid options: |

|

String |

|

The character set to use for the output. |

|

Boolean |

|

Indicates whether to indent the JSON code for

better readability or to compress the JSON into a single line.

Valid options: |

|

String |

None |

Skips null values in the specified data

structure. By default it does not skip. Valid options: |

output application/json indent=false, skipNullOn="arrays"

Reader Properties (for JSON)

When defining application/json input for the DataWeave reader, you can set

the following property.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Boolean |

|

Used for streaming input. Use only if

entries are accessed sequentially. Valid options: |

To demonstrate streaming, the following example streams a JSON file by reading each element in an array one at a time.

<file:config name="File_Config" doc:name="File Config" />

<flow name="dw-streaming-jsonFlow" >

<scheduler doc:name="Scheduler" >

<scheduling-strategy >

<fixed-frequency frequency="1" timeUnit="MINUTES"/>

</scheduling-strategy>

</scheduler>

<file:read doc:name="Read"

config-ref="File_Config"

path="${app.home}/myjsonarray.json"

outputMimeType="application/json; streaming=true"/>

<ee:transform doc:name="Transform Message" >

<ee:message >

<ee:set-payload ><![CDATA[%dw 2.0

output application/json

---

payload.myJsonExample map ((element) -> {

returnedElement : element.zipcode

})]]></ee:set-payload>

</ee:message>

</ee:transform>

<file:write doc:name="Write"

path="/path/to/output/file/output.json"

config-ref="File_Config"/>

<logger level="INFO" doc:name="Logger" message="#[payload]"/>

</flow>

- The streaming example configures the File Read operation to stream the JSON input by setting outputMimeType="application/json; streaming=true". In the Studio UI, you can set the MIME Type on the operation to application/json and the Parameters for the MIME Type to Key streaming and Value true.

- The DataWeave script in the Transform Message component iterates over the array in the input payload and selects its zipcode values.

- The Write operation returns a file, output.json, which contains the result of the transformation.

- The Logger prints the same output payload that you see in output.json.

The JSON input payload looks like the following.

{ "myJsonExample" : [

{

"name" : "Shoki",

"zipcode": "95838"

},

{

"name" : "Leandro",

"zipcode": "95823"

},

{

"name" : "Mariano",

"zipcode": "95815"

},

{

"name" : "Cristian",

"zipcode": "95815"

},

{

"name" : "Kevin",

"zipcode": "95824"

},

{

"name" : "Stanley",

"zipcode": "95841"

}

]

}

The streaming example produces the following output.

[

{

"returnedElement": "95838"

},

{

"returnedElement": "95823"

},

{

"returnedElement": "95815"

},

{

"returnedElement": "95815"

},

{

"returnedElement": "95824"

},

{

"returnedElement": "95841"

}

]

Skip Null On (for JSON)

You can use the skipNullOn writer property to omit null values from arrays, objects, or both.

When set to:

- arrays: Ignore and omit null values from arrays in the JSON output, for example, output application/json skipNullOn="arrays".

- objects: Ignore key-value pairs that have a null value. An object that contains only null values is written as an empty object ({}), for example, output application/json skipNullOn="objects".

- everywhere: Apply skipNullOn to arrays and objects, for example, output application/json skipNullOn="everywhere".
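The following sketch illustrates the everywhere setting on a small, made-up object. Under the rules above, the nickname pair and the null array element should both be omitted from the output, leaving only name and the two non-null zipcodes.

```dataweave
%dw 2.0
output application/json skipNullOn="everywhere"
---
{
  name: "Mariano",
  // Null value in an object: expected to be skipped.
  nickname: null,
  // Null element in an array: expected to be skipped.
  zipcodes: ["95815", null, "95823"]
}
```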

Newline Delimited JSON

MIME Type: application/x-ndjson

Writer Properties (for ndjson)

When specifying application/x-ndjson as the output format in a DataWeave

script, you can add the following properties to change the way the DataWeave

parser processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Boolean |

|

Valid options: |

|

String |

||

|

Number |

|

Size of the buffer writer. |

|

String |

Valid options: |

|

|

Boolean |

|

When set to |

Octet Stream

MIME Type: application/octet-stream

Writer Properties (for octet-stream)

When specifying application/octet-stream as the output format in a

DataWeave script, you can add the following properties to change the way

the DataWeave parser processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

Text Plain

MIME Type: text/plain

Writer Properties (for text/plain)

When specifying text/plain as the output format in a DataWeave script,

you can add the following properties to change the way the DataWeave parser

processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

String |

None |

Encoding for the writer to use. |

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

Text Java Properties

MIME Type: text/x-java-properties

Writer Properties (for properties)

When defining text/x-java-properties output in the DataWeave output

directive, you can change the way the parser behaves by adding optional

properties.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

String |

None |

Encoding for the writer to use. |

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

XML

MIME Type: application/xml

The XML data structure is mapped to DataWeave objects that can contain other objects as values to their keys. Repeated keys are supported.

<users>

<company>MuleSoft</company>

<user name="Leandro" lastName="Shokida"/>

<user name="Mariano" lastName="Achaval"/>

</users>

{

users: {

company: "MuleSoft",

user @(name: "Leandro",lastName: "Shokida"): "",

user @(name: "Mariano",lastName: "Achaval"): ""

}

}

Reader Properties (for XML)

When defining application/xml input for the DataWeave reader, you can set

the following properties.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Number |

|

The maximum number of entity expansions. The limit is in place to avoid Billion Laughs attacks. |

|

Boolean |

|

If the indexed XML reader should be

used when the threshold is reached. Valid options: |

|

String |

|

If a tag with empty or blank text should

be read as null. Valid options: |

|

Boolean |

|

Indicates whether external entities

should be processed or not. By default this is disabled to avoid XXE attacks.

Valid options: |

|

|

|

Enable or disable DTD support. Disabling skips (and does not process) internal and external subsets. Valid Options are |

Writer Properties (for XML)

When specifying application/xml as the output format in a DataWeave script,

you can add the following properties to change the way the DataWeave parser

processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

Number |

|

Size of the buffer writer. |

|

String |

None |

Encoding for the writer to use. |

|

Boolean |

|

When set to |

|

Boolean |

|

Indicates whether to indent the output.

Valid options: |

|

String |

|

When the writer should use inline close

tag. Valid options: |

|

String |

None |

Valid options: |

|

Boolean |

|

Whether to write a nil attribute when

the value is null. Valid options: |

|

String |

None |

Skips null values in the specified data

structure. By default it does not skip. Valid options: |

|

Boolean |

|

Indicates whether to write the XML

header declaration. Valid options: |

output application/xml indent=false, skipNullOn="attributes"

The inlineCloseOn parameter defines whether the output is structured like this (the default):

<someXml>

<parentElement>

<emptyElement1></emptyElement1>

<emptyElement2></emptyElement2>

<emptyElement3></emptyElement3>

</parentElement>

</someXml>

It can also be structured like this (set with a value of empty):

<payload>

<someXml>

<parentElement>

<emptyElement1/>

<emptyElement2/>

<emptyElement3/>

</parentElement>

</someXml>

</payload>

See also Example: Outputting Self-closing XML Tags.

Skip Null On (for XML)

You can specify whether your transform generates an outbound message that

contains fields with "null" values, or if these fields are ignored entirely.

This can be set through an attribute in the output directive named skipNullOn,

which can be set to three different values:

elements, attributes, or everywhere.

When set to:

- elements: A key:value pair with a null value is ignored.

- attributes: An XML attribute with a null value is skipped.

- everywhere: Apply this rule to both elements and attributes.
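As a sketch with made-up data, the script below sets skipNullOn to everywhere. Following the rules above, the writer is expected to drop both the lastName attribute and the company element, because each has a null value.

```dataweave
%dw 2.0
output application/xml skipNullOn="everywhere"
---
{
  users: {
    // lastName is a null attribute: expected to be skipped.
    user @(name: "Leandro", lastName: null): "",
    // company is a null element: expected to be skipped.
    company: null
  }
}
```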

Defining a Metadata Type (for XML)

In the Transform component, you can define an XML type through the following methods:

- By providing a sample file

- By pointing to a schema file

CData Custom Type (for XML)

MIME Type: application/xml

CData is a custom data type for XML that is used to identify a CDATA XML

block. It can tell the writer to wrap the content inside CDATA or to check

if the input string arrives inside a CDATA block. CData inherits from the

type String.

%dw 2.0

output application/xml

---

{

users:

{

user : "Mariano" as CData,

age : 31 as CData

}

}

<?xml version="1.0" encoding="UTF-8"?>

<users>

<user><![CDATA[Mariano]]></user>

<age><![CDATA[31]]></age>

</users>

URL Encoding

MIME Type: application/x-www-form-urlencoded

A URL encoded string is mapped to a DataWeave object:

- You can read the values by their keys using the dot or star selector.

- You can write the payloads by providing a DataWeave object.

Here is an example of x-www-form-urlencoded data:

key=value&key+1=%40here&key=other+value&key+2%25

The following DataWeave script produces the data above:

output application/x-www-form-urlencoded

---

{

"key" : "value",

"key 1": "@here",

"key" : "other value",

"key 2%": null

}

You can read in the data above as input to the DataWeave script

in the next example to return value@here as the result.

output text/plain

---

payload.*key[0] ++ payload.'key 1'

Note that there are no reader properties for URL encoded data.

Writer (for URL Encoded Data)

When specifying application/x-www-form-urlencoded as the output format in

a DataWeave script, you can add the following properties to change the way the

DataWeave parser processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

String |

None |

Encoding for the writer to use. |

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

- output application/x-www-form-urlencoded

- output application/x-www-form-urlencoded encoding="UTF-8", bufferSize="500"

Note that in the DataWeave write function, you can also pass the property as

an optional parameter. The scope of the property is limited to the DataWeave

script where you call the function.
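A minimal sketch of the write function form, using two of the key-value pairs from the earlier example. Based on the encoding shown there, the result should be the string key=value&key+1=%40here.

```dataweave
%dw 2.0
output text/plain
---
// The writer properties object applies only to this write call.
write(
  { "key": "value", "key 1": "@here" },
  "application/x-www-form-urlencoded",
  { encoding: "UTF-8" }
)
```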

YAML

MIME Type: application/yaml

Writer Properties (for YAML)

When specifying application/yaml as the output format in a DataWeave script,

you can add the following properties to change the way the DataWeave parser

processes data.

| Parameter | Type | Default | Description |

|---|---|---|---|

|

String |

|

Encoding for the writer to use. |

|

Number |

|

Size of the buffer writer. |

|

Boolean |

|

When set to |

|

String |

None |

Skips null values in the specified data

structure. By default it does not skip. Valid options:

|